Robotic Framework for Neuroprosthetics

Contributor(s): Tatyana Dobreva, David Brown

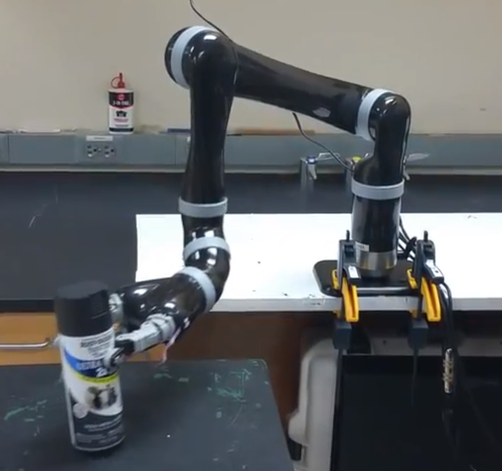

Coupling neural decoding with robotics lets a user go from thinking "I want to drink a cup of beer" to having a robotic arm serve a cold beverage. The Jaco Robotic Arm Framework (JRAF) combines computer vision and robotic manipulation to detect objects in the subject's environment, offer potential actions, and execute the selected intention.

Working in Richard Andersen's lab at Caltech, we designed and implemented a shared-control robotic arm framework that takes decoded neural intent and translates it into actions executed by the arm. The framework consists of several modules:

- Object detection powered by Microsoft Kinect
- JACO arm controller to send commands and receive sensory data
- Trajectory planner to avoid collisions and determine the path for a specified action
- Action handler to determine the available commands and execute them
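The page does not describe how the trajectory planner works internally. As a minimal sketch of the collision-avoidance idea only, the code below runs A* search over a hypothetical 2D occupancy grid of the workspace, where cells marked 1 are occupied by obstacles. The grid representation and the `plan_path` function are assumptions for illustration; a planner for a real arm such as the JACO typically searches over joint angles or 3D end-effector poses rather than a flat grid.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* search on a 4-connected occupancy grid (1 = obstacle, 0 = free).

    Returns the cells from start to goal, or None if no collision-free
    path exists. Hypothetical helper, not part of JRAF.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance never overestimates for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    # Heap entries are (estimated total cost, cost so far, cell).
    open_heap: List[Tuple[int, int, Cell]] = [(heuristic(start), 0, start)]
    came_from: Dict[Cell, Cell] = {}
    best_cost: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Follow parent pointers back to the start, then reverse.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            # Skip cells outside the grid or occupied by an obstacle.
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None


# Example: route around a two-cell obstacle in the middle row.
grid = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],  # 1 = cells occupied by a detected obstacle
    [0, 0, 0, 0],
]
path = plan_path(grid, (0, 0), (2, 3))
print(path)
```

A* is used here because its heuristic keeps the search focused toward the goal; any complete planner that refuses occupied cells would illustrate the same point.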

Let's walk through an example!

- The system is tasked with finding bottles and apples in the environment for the patient to interact with.
- The object detection module detects an apple and a bottle.
- The patient is presented with a list of potential actions: grab bottle, grab apple.
- The neural decoder detects that the patient is imagining "grasping a bottle" and selects "grab bottle".
- The trajectory planner calculates a route to pick up the bottle, and the arm controller executes it.
- Once the bottle is grasped, the action handler generates a new list of actions: bring bottle to mouth, return bottle, give bottle to caregiver.
- The neural decoder detects that the patient is imagining "drinking" and selects "bring bottle to mouth".
- The trajectory planner routes the robot arm to bring the bottle to the patient, allowing them to drink.
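The walkthrough amounts to a small state machine in the action handler: the menu offered to the patient depends on what the gripper is holding. The sketch below models only that menu logic. The `ActionHandler` class, its method names, and the exact action strings are hypothetical, and the decoder's selections are scripted rather than decoded from neural data.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ActionHandler:
    """Hypothetical action handler: the menu depends on what the gripper holds."""

    detected: List[str]          # object labels reported by the detection module
    held: Optional[str] = None   # object currently in the gripper, if any

    def __post_init__(self) -> None:
        # Copy so that removing objects does not mutate the caller's list.
        self.detected = list(self.detected)

    def available_actions(self) -> List[str]:
        if self.held is None:
            # Empty gripper: offer to grab each detected object.
            return [f"grab {obj}" for obj in self.detected]
        # Holding something: offer follow-up actions for that object.
        return [
            f"bring {self.held} to mouth",
            f"return {self.held}",
            f"give {self.held} to caregiver",
        ]

    def execute(self, action: str) -> None:
        # Only actions that were offered to the patient may be executed.
        if action not in self.available_actions():
            raise ValueError(f"action not offered: {action!r}")
        # Trajectory planning and arm motion would happen here; this sketch
        # only updates the gripper state.
        if action.startswith("grab "):
            self.held = action[len("grab "):]
        elif action.startswith("return "):
            self.held = None  # object is put back into the scene
        elif action.startswith("give "):
            self.detected.remove(self.held)  # object leaves the scene
            self.held = None
        # "bring ... to mouth" keeps the object in the gripper.


# Replaying the walkthrough with scripted selections.
handler = ActionHandler(detected=["bottle", "apple"])
print(handler.available_actions())  # ['grab bottle', 'grab apple']
handler.execute("grab bottle")
print(handler.available_actions())
# ['bring bottle to mouth', 'return bottle', 'give bottle to caregiver']
handler.execute("bring bottle to mouth")
```

Rejecting actions that were not on the current menu keeps a misdecoded selection from triggering an arm motion that makes no sense in the current state.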

Object detection was built with algorithms from the Point Cloud Library (PCL).
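The page does not say which PCL algorithms were used. A common tabletop pipeline removes the supporting plane and then groups the remaining points with Euclidean cluster extraction, which PCL provides as `pcl::EuclideanClusterExtraction`. The sketch below reimplements only that clustering step in plain Python, assuming the table plane has already been removed and coordinates are in meters; the tolerance, minimum cluster size, and sample points are illustrative values, not ones from JRAF.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def euclidean_clusters(
    points: Sequence[Point], tolerance: float, min_size: int = 1
) -> List[List[int]]:
    """Return clusters of point indices: the connected components of the graph
    that links any two points at most `tolerance` apart.

    Neighbor search is brute force (O(n^2)); PCL's implementation instead uses
    a spatial search structure, typically a k-d tree.
    """
    unassigned = set(range(len(points)))
    clusters: List[List[int]] = []
    while unassigned:
        seed = unassigned.pop()
        queue = deque([seed])
        cluster = [seed]
        while queue:
            i = queue.popleft()
            # Grow the cluster with every unassigned point near point i.
            near = [j for j in unassigned if math.dist(points[i], points[j]) <= tolerance]
            for j in near:
                unassigned.remove(j)
                queue.append(j)
                cluster.append(j)
        # Discard clusters too small to be an object (e.g. sensor noise).
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return sorted(clusters)


def centroid(points: Sequence[Point], cluster: List[int]) -> Point:
    """Mean position of a cluster, a rough location estimate for the object."""
    n = len(cluster)
    x, y, z = (sum(points[i][k] for i in cluster) / n for k in range(3))
    return (x, y, z)


# Hypothetical points left after removing the table plane.
cloud = [
    (0.00, 0.00, 0.10), (0.01, 0.00, 0.12), (0.00, 0.01, 0.15),  # object A
    (0.30, 0.20, 0.05), (0.31, 0.21, 0.06),                      # object B
    (0.80, 0.80, 0.02),                                          # isolated noise point
]
objects = euclidean_clusters(cloud, tolerance=0.05, min_size=2)
print(objects)  # [[0, 1, 2], [3, 4]]
```

Each surviving cluster would then be labeled (for example, as a bottle or an apple) before the action handler builds its menu; that classification step is not shown.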

The modules were developed using ROS.

For robot arm actuation we used Kinova's JACO API.

Writings

News

How a mind-controlled robotic arm paved the way for Caltech's new neuroscience institute

December 6, 2016

Sometimes the biggest gifts arrive in the most surprising ways. A couple in Singapore, Tianqiao Chen and Chrissy Luo, were watching the news and saw a Caltech scientist help a quadriplegic use his thoughts to control a robotic arm so that — for the first time in more than 10 years — he could sip a drink unaided.